Thoughts on Vibe Coding with AI

20 April 2025 at 11:00 am

Stories about “Vibe coders” keep popping up in my feed. At Bitraf there is currently an influx of new members who would never code unless AI enabled them to do so. These are “hardware vibe coders” who trust that AI can help them solve hardware problems. Is AI coding for either software or hardware currently viable? And is vibe coding possible beyond the first prototype?

Vibe coders code by “vibe” rather than from knowledge. As a seasoned programmer, it's amusing to read articles like this, where a tech journalist with no coding background proudly shows off that he's been able to make a few extremely simple projects.

He boasts that his website now looks better than it did using WordPress. Given that it's an extremely basic vCard-style website that is not updatable, it's not hard to understand why he would think that this might be better. If he previously struggled with HTML and the ambition is simply to get a webpage online, this really is the way to go. No subscription service, no need to keep the site secure, no pesky content updates. AI is a perfectly good way to generate a static single-page site. His other projects show a similar ambition - to make extremely simple apps that you could whip up in 30 minutes if you knew JavaScript. There's just one problem - if anything is wrong with any of these, he wouldn't have a clue how to fix it.

Can you fix broken AI-code?

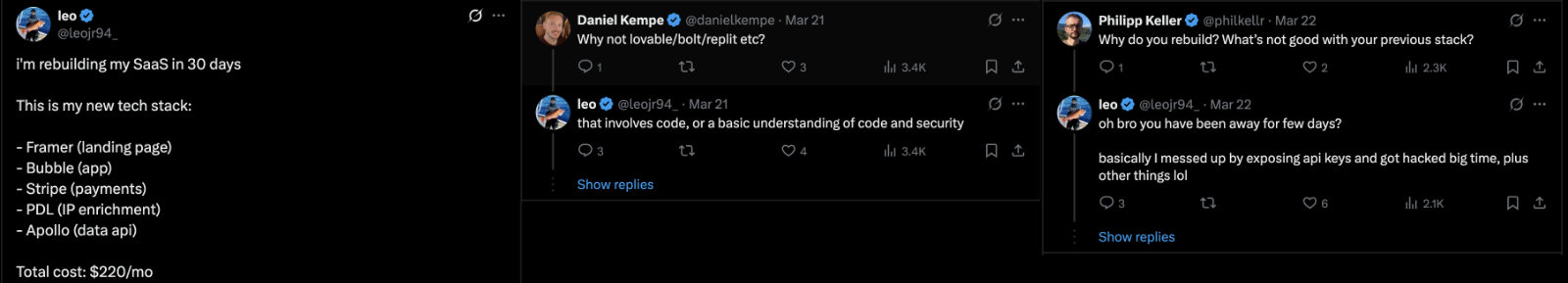

AI opens up programming to people who have never written a program. This is great for those users, and they truly feel accomplished when they manage to get something to run. The problem is that the more advanced the task you solve with AI, the lower your chance of ever fixing something that goes wrong. The X user @leojr94 painfully experienced this after boasting online that he built a commercial service using the AI service Cursor. Fireship has a great video highlighting how badly that went. TL;DR: he got hacked and never managed to get either his customers or his data back, but feel free to watch this unfold again as he now tries to pay for security to prevent being hacked again.

Today it's been 30 days and the new service hasn't launched yet, but I'll check back to see if he can get it going. Without even basic programming knowledge, you either have to pay someone or know a kind & smart person with skills beyond what an AI service can currently offer.

At Bitraf, we now see a new type of member joining: Vibe Coders who have used AI to solve hardware problems. They whip up the recipe for a prototype with AI in just seconds. The AI carefully builds up an answer by using knowledge (or literally stealing/copying) from tutorials and blogs. The end user is then stumped, since they don't have access to the exact hardware components that the AI suggested. Since they don't know anything about electronics, it's extremely hard to find a suitable replacement component, but why not just ask the AI for that? Within just a few extra questions, the context window is exceeded and the AI starts giving out random advice without remembering what its original recommendation was.

At Makerspaces/Hackerspaces such as Bitraf, there's always a seasoned user with real programming or hardware experience who can help them when they're stuck. But by themselves, they don't have a chance of solving the problems that arise. They just don't have the skills, and the current AI systems don't have the context required. Even when you bypass the free GPT offerings and pay real money, the context is limited. The free versions of ChatGPT and Gemini just can't give away the required compute & memory.

Context is key for AI to write real apps

But does this mean AI isn't useful for programming? Absolutely not, but it's all a question of ambition and scale. Current models are completely unable to maintain a large context in memory. It's simply too expensive at the current state. You can pay to expand the context, but I have yet to see a tool that could hold the context for even a simple project of the kind I'm working on daily. I have not seen many good and hype-free estimates for when AI will be truly useful, but this interview from the Joe Rogan Experience with Ray Kurzweil is a really good watch (full version here). Here, Ray points out that this is all fundamentally limited by hardware that is improving at a fairly fixed rate, close to Moore's Law. In 10 years' time, when compute and memory are half the price, we'll see huge advances in AI. Maybe even sentient AIs close to 2040? It seems plausible.

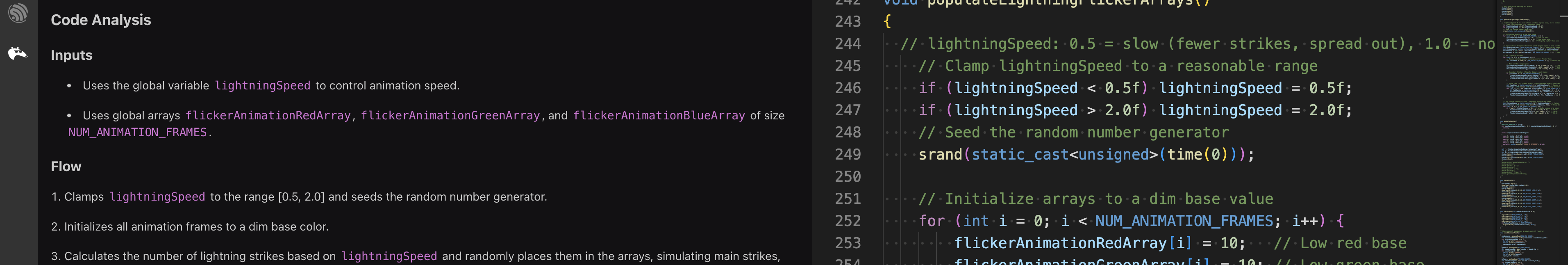

AI is, however, fully usable already - if you just understand the limitations. I recently worked on making a convincing lightning effect for an installation. After spending a couple of hours trying to animate this with code, I didn't have something that looked convincing enough. I then gave Grok a very specific query where I described the effect I wanted, the programming language to use, and the name and structure of all the variables that I wanted to use. Grok was able to look up information on how real lightning works, abstract that into code and then give me two C++ functions: one for setting up and randomizing the effect and one for playing it back. I specifically asked for the effect to be filled into arrays, so I could play it back at any speed I wanted. This task was simple enough that I could get Grok to tweak the effect to make it scalable, so I could adjust the duration of the lightning and after-flashes.
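To make that two-function structure concrete, here is a minimal sketch of what such a split could look like. It is not the code Grok produced: every name (`LightningEffect`, `setupLightning`, `playLightning`), the 64-sample array size and the decay and gap constants are assumptions made up for illustration. The setup function fills an array with one randomized strike, and the playback function maps elapsed time onto that array, so the same strike can be stretched to any duration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>

// Hypothetical size: number of brightness samples stored for one strike.
constexpr std::size_t kFrames = 64;

struct LightningEffect {
    // 0 = dark, 255 = full flash
    std::array<std::uint8_t, kFrames> brightness{};
};

// Fill the array with one randomized strike: a full-brightness main flash
// followed by a few weaker after-flashes, each fading out quickly.
// All ranges below are illustrative guesses, not measured lightning data.
void setupLightning(LightningEffect& fx, std::uint32_t seed) {
    std::mt19937 rng(seed);
    std::uniform_int_distribution<int> flashCount(2, 4);
    std::uniform_int_distribution<int> gap(4, 12);
    std::uniform_int_distribution<int> peak(90, 200);

    fx.brightness.fill(0);
    std::size_t pos = 0;
    int level = 255;  // the main flash starts at full brightness
    const int flashes = flashCount(rng);
    for (int f = 0; f < flashes && pos < kFrames; ++f) {
        int v = level;
        for (std::size_t i = pos; i < kFrames && v > 0; ++i) {
            // Keep the brighter value where two flashes overlap.
            if (v > fx.brightness[i]) {
                fx.brightness[i] = static_cast<std::uint8_t>(v);
            }
            v = v * 3 / 5;  // simple decay per sample
        }
        pos += static_cast<std::size_t>(gap(rng));
        level = peak(rng);  // after-flashes are weaker than the main flash
    }
}

// Return the brightness at elapsedMs when the stored strike is stretched
// (or squeezed) to last durationMs. Returns 0 once the strike is over.
std::uint8_t playLightning(const LightningEffect& fx,
                           std::uint32_t elapsedMs,
                           std::uint32_t durationMs) {
    assert(durationMs > 0);
    if (elapsedMs >= durationMs) {
        return 0;
    }
    const std::size_t index = static_cast<std::size_t>(
        static_cast<std::uint64_t>(elapsedMs) * kFrames / durationMs);
    return fx.brightness[index];
}
```

Because the strike lives in an array, playback speed is just a matter of which `durationMs` you pass in: calling `playLightning(fx, t, 500)` plays the same stored strike twice as fast as `playLightning(fx, t, 1000)`.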

This was my first really useful AI-generated code. Since I knew enough about programming to describe how I wanted it, both structure and feature-wise, I can easily maintain this code. This is currently a good way to use AI to write code, but for anything beyond specific features in an app, it won't work. I also use the AI tool Codium (now Qodo) as an extension in VS Code when writing apps. This makes it faster to write the initial code, but once I've written 80% of the code, I turn the AI tool off. At that point, it's more in the way than helping.

As seasoned programmers know, you'll write 80% of the code in 20% of the time. The remaining 80% of the time goes into the details of the remaining 20%, and AI won't help you there. The reason is simply that it cannot hold a big enough context in memory. Once larger contexts arrive that can hold a significant part of a program in memory, programming will change significantly. Faster processors will make learning cheaper as well. We're certainly not there yet, but maybe in 3-5 years? For now, AI has too many basic problems it needs to solve.

When will AI learn to count?

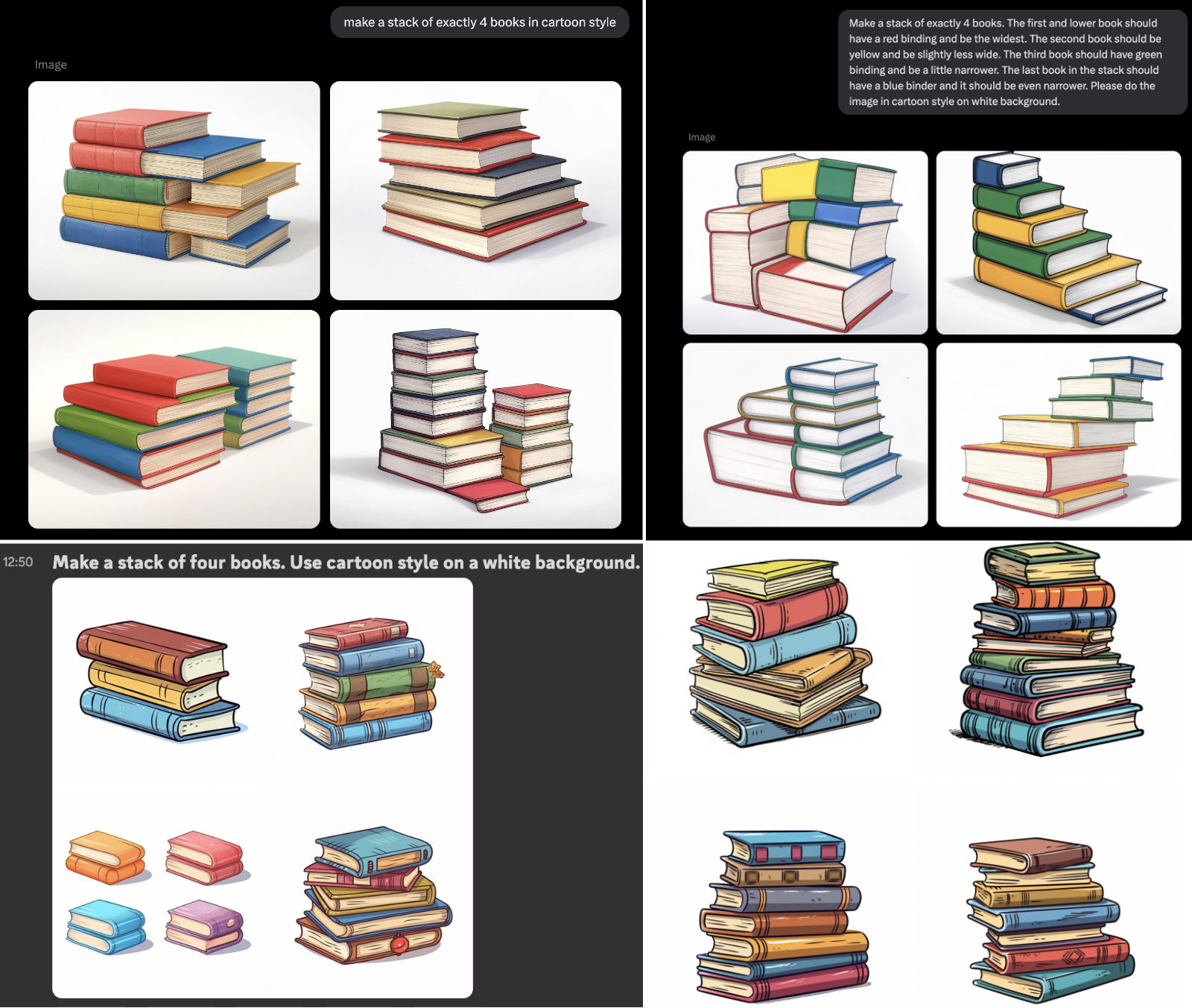

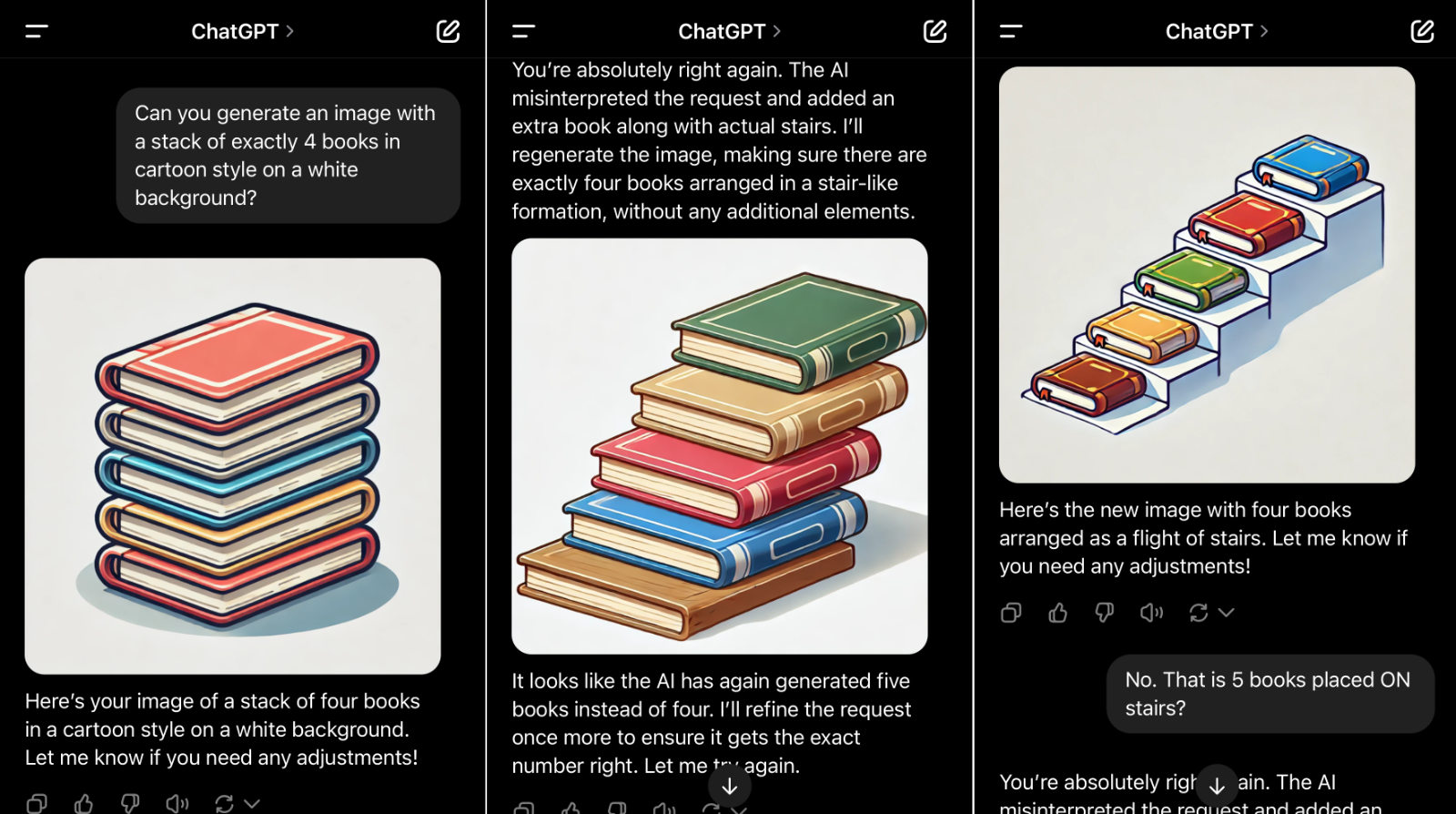

Apart from testing AI for programming, I also play around with AI for image generation. It's very easy to generate amazing-looking images, but what you can do that is really useful is still severely limited. In this case it's not the context size that is the problem. It's rather what the basis for the image generation is. For my post on "The stairs of Knowledge", I needed a picture showing a stack of books. I wanted the books organized so they looked like a flight of stairs with 4 steps. I tried generating the image using ChatGPT, Midjourney and Grok. They all failed the same way, but here's how my dialogue with ChatGPT went:

Simple requests where the AI can likely find enough references online will work. Making a stack of books is easy, but counting them is currently impossible. The current generation of AI image tools simply doesn't have that ability. You MAY get lucky once in 10 attempts, but that's just random luck, and it's impossible to repeat an image with some extra tweaks. If you want the pile of books organized in any way not typically found in online pictures, you're out of luck. It's just not possible. Apparently, the latest edition of ChatGPT has now solved some of these issues by changing image generation to use a textual context rather than noise as the basis, but I have yet to test that out. I already pay for Grok, so I'm not currently in a hurry to pay for more services.

Below are the attempts from Grok and Midjourney at making a stack of 4 books. Grok (the two upper images) has the slimmest understanding of physics, with books going into other books. It did, however, ALMOST make a good version (top far right), except that it used 6 books rather than the 4 requested. I guess I'll revisit this one as well in a year or two, or do you think it'll go faster?